Is GPT-4 General AI?

I read the newest 154-page bombshell paper from Microsoft so you don't have to (but you should!). Honestly, looking around the cafe I am writing this from, I am surprised people are not freaking out.

For the past couple of weeks, Robert Chandler and I have been playing with LLMs, with the added sprinkle of giving them access to different tools and APIs. We were fascinated by the models' ability to try different things and learn. We have both been working in ML/AI since 2014, and a thought started to dawn on us: "Is this the beginning? Are we actually getting to General Intelligence?"

Looking at "Sparks of Artificial General Intelligence" (https://arxiv.org/pdf/2303.12712.pdf), I think we are getting there. Here is a summary; see below for examples.

The paper is based solely on people playing with GPT-4 (an unrestricted version, meaning without the limitations OpenAI places on the public release).

First of all, when asking simple, dumb questions, I thought ChatGPT was comparable to GPT-4. I was very wrong.

In my mind, ChatGPT was a better search tool.

GPT-4 is, in many ways, a better and more helpful human. It can:

Use external tools and APIs, and learn from its mistakes when using them. Using tools was a step change for humans, and I think it is the same for AI.

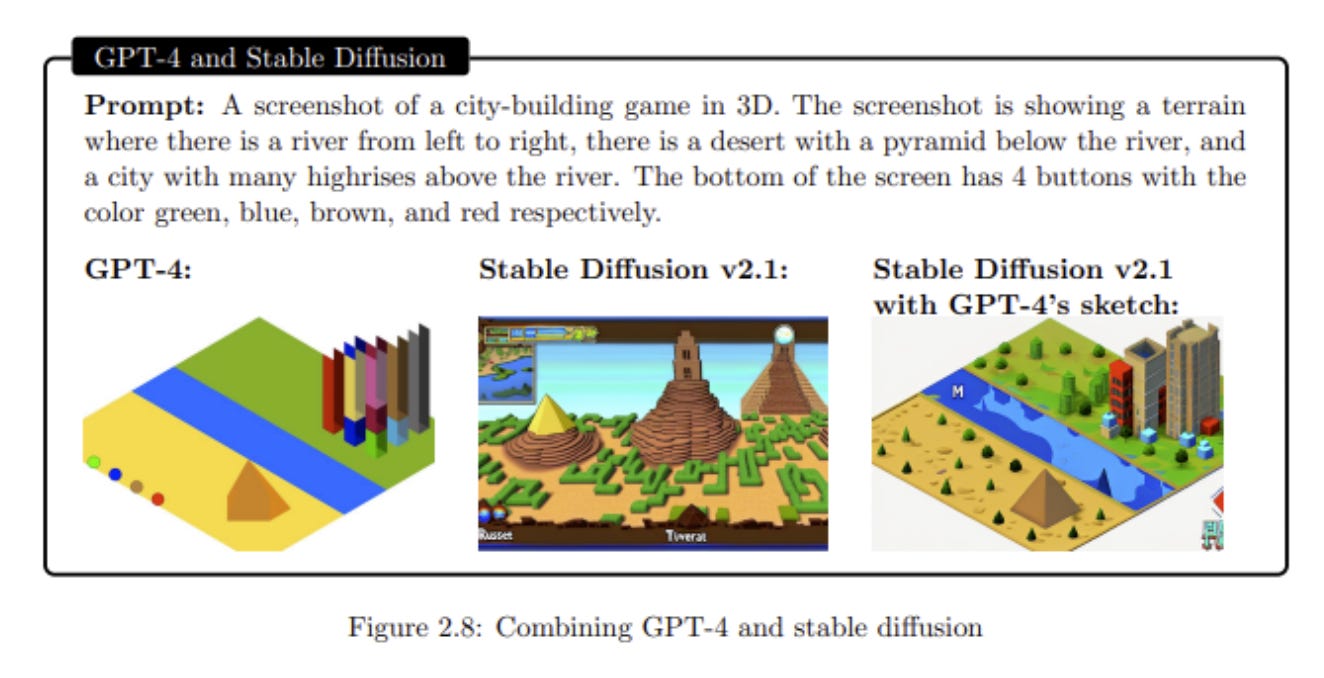

Input and output images. These are simple 2D and 3D SVGs, which can be fed into, for example, Stable Diffusion to generate a better-quality image.

Think logically: see below for its ability to solve problems from the International Mathematical Olympiad.

Plan out actions and use external tools; see below for an example of using a calendar and email to schedule meetings.

Become your virtual handyman.

Hack devices on your local network (!)

Understand people's beliefs and intentions ('theory of mind'). This is very important: even when given limited information, it can guess someone's intention.

Answer Fermi questions. These are known to be very difficult to answer, and I have used them in many interviews. For example: "How many piano tuners are there in Chicago?"
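For anyone who hasn't met one: a Fermi question is answered by breaking it into factors you can roughly guess and multiplying them together. Here is a minimal Python sketch of the classic piano-tuner chain. Every number in it is an illustrative assumption, not data from the paper, and the function name `fermi_piano_tuners` is made up for this example.

```python
def fermi_piano_tuners(
    population=3_000_000,          # assumed: people in Chicago (rounded)
    people_per_household=2,        # assumed average household size
    share_with_piano=1 / 20,       # assumed: 1 household in 20 owns a piano
    tunings_per_piano_per_year=1,  # assumed tuning frequency
    tunings_per_tuner_per_day=4,   # assumed, including travel time
    working_days_per_year=250,     # assumed
):
    """Chain rough guesses into an order-of-magnitude estimate."""
    households = population / people_per_household
    pianos = households * share_with_piano
    tunings_needed_per_year = pianos * tunings_per_piano_per_year
    tunings_per_tuner_per_year = tunings_per_tuner_per_day * working_days_per_year
    # Tuners needed = yearly demand for tunings / yearly capacity of one tuner.
    return tunings_needed_per_year / tunings_per_tuner_per_year


if __name__ == "__main__":
    print(f"About {fermi_piano_tuners():.0f} piano tuners")  # About 75 piano tuners
```

The exact number is not the point. What makes these questions hard is choosing the right decomposition and keeping each guess within an order of magnitude, since every factor can be sanity-checked on its own.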

Now, about its limitations.

It still sucks at jokes, and this points to an important limitation: it cannot "see" forward. See this simple example, where I ask it to predict how many words it will output in its response to that very question. This means it's not good at discontinuous tasks:

“Examples of discontinuous tasks are solving a math problem that requires a novel or creative application of a formula, writing a riddle, coming up with a scientific hypothesis or a philosophical argument”

It is still a very intelligent Golem following orders, but scientists are already thinking ahead: "Equipping LLMs with agency and intrinsic motivation is a fascinating and important direction for future work." This is also massively dangerous. Imagine telling it that it should be good, and it looks up the definition of good and wipes out all the humans because we are not good for our planet…

And lastly, we have no clue what is actually happening! How does it reason, plan, and create? In the end, a Large Language Model is a probability distribution over the next word given the previous words. The fact that we got where we are just by showing it more data and training it for longer blows my mind. Maybe we're all just stochastic parrots after all…
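To make "a probability distribution over the next word given the previous words" concrete, here is a toy Python sketch. It is a bigram model: it conditions on only the single previous word and estimates probabilities by counting, whereas GPT-4 is a neural network conditioning on a long window of previous tokens. The corpus and function names are made up for illustration. Sampling one word at a time from these conditional distributions is the same chain-rule idea, P(w1, ..., wn) = P(w1) · P(w2 | w1) · ... · P(wn | w1, ..., wn-1), just with a much cruder estimate of each factor.

```python
from collections import Counter, defaultdict
import random

# Hypothetical toy corpus; a real LLM is trained on vastly more text.
corpus = "the cat sat on the mat the cat ate the fish".split()

# Count how often each word follows each previous word (bigram counts).
counts = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    counts[prev][nxt] += 1


def next_word_distribution(prev: str) -> dict:
    """Estimate P(next word | previous word) from the bigram counts."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


def generate(start: str, length: int, seed: int = 0) -> str:
    """Sample up to `length` more words, one at a time, from the distribution."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length):
        dist = next_word_distribution(words[-1])
        if not dist:  # word never seen with a follower: stop early
            break
        choices, weights = zip(*dist.items())
        words.append(rng.choices(choices, weights=weights)[0])
    return " ".join(words)


if __name__ == "__main__":
    print(next_word_distribution("the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
    print(generate("the", 8))
```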

What's next? I think the next thing LLMs are gonna have to deal with is memory. "Any deterministic language model that conditions on strings of bounded length is equivalent to a finite automaton, hence computationally limited." We need to add a way for it to consolidate its experience over time (without the need for retraining).
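The finite-automaton point can be illustrated with a toy. Below, a hypothetical deterministic "model" only ever sees the last `K` tokens, so its entire state is that window. With a vocabulary of size |V| there are at most |V|^K full windows, so once the prompt fills the window, generation must revisit some state within |V|^K steps and from then on repeats itself forever. This is a sketch of the argument under those assumptions, not of how GPT-4 works; `VOCAB`, `K`, and the rule inside `next_token` are all arbitrary.

```python
# A toy deterministic "language model" with a bounded context window.
# Everything here (VOCAB, K, the rule in next_token) is hypothetical.

VOCAB = ("a", "b")
K = 2                         # the model only ever sees the last K tokens
NUM_STATES = len(VOCAB) ** K  # number of possible full-length windows


def next_token(window: tuple) -> str:
    """Any fixed function of the window would do; this rule is arbitrary."""
    return "a" if window.count("b") % 2 == 0 else "b"


def run(prompt: str, steps: int):
    """Generate tokens and report the first time a window (state) repeats.

    Returns (tokens, first_step_of_state, step_where_it_repeats),
    or (tokens, None, steps) if no repeat occurred within `steps`.
    """
    tokens = list(prompt)
    seen = {}  # window -> step at which it was first seen
    for step in range(steps):
        state = tuple(tokens[-K:])
        if state in seen:
            # Same window, same deterministic output: the text is now periodic.
            return tokens, seen[state], step
        seen[state] = step
        tokens.append(next_token(state))
    return tokens, None, steps


if __name__ == "__main__":
    tokens, start, repeat = run("ab", 10)
    print("".join(tokens), start, repeat)  # abbab 0 3
```

Memory that persists and grows across interactions is one way out of this trap, because the state is then no longer limited to a fixed-size window.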

I am scared, excited and ready to build.